
Google wants us to believe its AI products, from Gemini to AI Overviews, are becoming smarter every day. We have seen the demos, read the headlines, and even tested some of these features ourselves.

At first, it looks like AI is evolving into an autonomous genius that can answer anything.



But let’s pause. Is AI really that smart? Or is the “intelligence” we see in Google’s outputs propped up by human workers we don’t hear about?

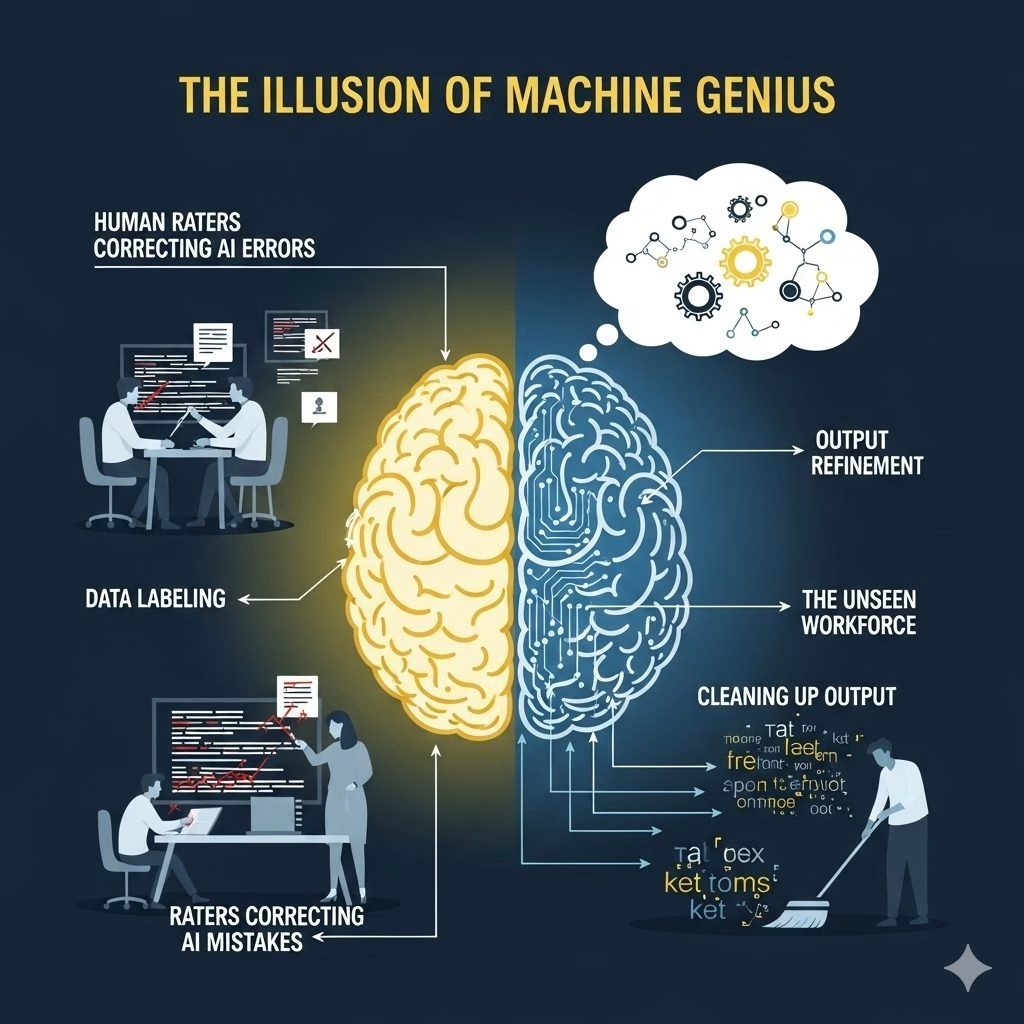

Behind every polished AI answer lies the unseen work of thousands of contracted human raters. These individuals clean up hallucinations, moderate unsafe content, and nudge AI outputs into something usable.

Yet these raters are rushed and underpaid, and their working conditions are increasingly unsustainable. And here is the catch: this hidden human labor has direct consequences for SEO, visibility, and brand trust.

The Illusion of Machine “Genius” Depends on Human Labor

When Google revealed Gemini and rolled out AI Overviews, it positioned them as futuristic, almost magical tools.

But in reality, every AI system is only as good as the data and feedback behind it.

That’s where human raters come in. Google hires large numbers of contractors worldwide to act as invisible editors. Their tasks? Correct AI’s embarrassing mistakes, steer outputs away from harmful content and essentially “teach” the system not to look stupid.

Think of the infamous “glue on pizza” recommendation in AI Overviews. That mistake made headlines precisely because it slipped through the cracks. Rater feedback is supposed to catch absurdities like this and teach the system to avoid them before they reach users.

The takeaway: what looks like AI brilliance is, in truth, a hybrid of machine output and human clean-up.

Quality Control in AI Is a Weak Link, and Search Can’t Be Trusted Blindly

The deadlines raters face have reportedly shrunk from around 30 minutes per task to as little as 10 minutes, a cut of roughly two-thirds. Instead of carefully fact-checking, raters are forced to rush, prioritizing speed over accuracy.

Add to this the fact that raters often work outside their expertise. Someone reviewing medical answers may have no healthcare background; someone editing financial advice may never have invested in stocks.

The result? AI outputs shaped by workers with little context, under crushing time pressure.

This weak link means Google Search, and especially its AI answers, can’t be trusted blindly. The system’s “intelligence” rests on fragile, inconsistent checks, which creates real risks in sensitive categories such as health and finance.

For SEOs, this is a warning sign: if the quality of AI-driven results dips, your brand may be tied to misinformation or sloppy summaries without you even knowing it.

Ethical & Editorial Guardrails Get Loosened — Fast

AI companies love to talk about “safety.” But when speed and profit are at stake, guardrails often get quietly loosened.

Take content moderation. At launch, Google and others restrict outputs that might include hate speech, harmful misinformation, or explicit material. But over time, policies bend.

Content once forbidden may now be allowed if it comes from the user’s own input. In other words: if a user supplies unsafe or offensive material, the AI may repeat or build on it instead of refusing.

For SEO, this matters deeply. Google’s ranking philosophy emphasizes E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness).

But if AI outputs are shaped by stressed, under-supported raters rather than true domain experts, the reliability of those E-E-A-T signals weakens.

The net effect? User safety and content trust erode, and the brands appearing in AI answers are dragged into the fallout.

Second-Order SEO Impacts: Brand Trust, Search Experience, and Visibility

Let’s connect this back to your world: SEO.

If AI-generated search results are unreliable, everyone in the ecosystem — businesses, publishers and brands — takes a hit.

- Brand Trust: Imagine your blog on healthy eating being summarized as “eat more sugar for diabetes management.” That is not just wrong; it damages your brand’s reputation.

- Search Experience: Users burned by one bad AI answer may start distrusting not just AI Overviews but also snippets, knowledge panels, and even organic results.

- Visibility: Publishers are already reporting revenue declines. Penske Media, owner of Rolling Stone and Variety, said Google’s AI Overviews have cut its affiliate revenue by a third. Its argument: AI summaries lift its reporting, display it on the SERP, and keep users from clicking through.

SEO has always been about visibility and trust. Both are now at risk when AI’s scaffolding is built on fragile human labor.

The Future: AI-Augmented Search Hinges on Sustainable, Ethical Human Input

So what’s next? If raters remain underpaid and overworked, quality will keep slipping. And if quality slips, AI-powered search loses credibility — taking your brand along with it.

For SEOs, the future means:

- Demanding transparency. We need clear answers from Google: How are raters trained? What quality checks exist? Is this about accuracy or just speed?

- Monitoring SERP volatility. Pay attention to declines in AI answer quality, strange snippets, or factual errors. These may not be “algorithm updates”; they may instead reflect weakened human quality control, such as rushed or burned-out raters.

- Advocating for expertise. Push for sensitive verticals like healthcare, finance, and law to rely on raters with genuine domain expertise, not just crowdsourced contractors.

The bottom line: sustainable AI search requires ethical, well-supported human labor. Without it, the trust that underpins search and SEO falls apart.

Closing Thought

Google’s bid to seem “smarter” is, in reality, a story of human labor at scale. The polish of AI Overviews and Gemini hides an invisible workforce under pressure. And when those guardrails weaken, it’s not just Google that suffers — it’s brands, publishers, and every SEO professional counting on accurate visibility.

For SEOs, recognizing the human scaffolding behind AI isn’t just an ethical stance. It’s a strategic one. The trustworthiness of search results, and by extension your brand’s visibility, depends on it.

At Stan Ventures, we help businesses navigate these shifts, ensuring your SEO strategy accounts for the realities of AI-powered search. From protecting brand trust to building authority beyond Google, we’re here to future-proof your visibility. Book a consultation today and let’s build a strategy that thrives in the age of AI.
