

Google’s John Mueller is urging SEO professionals to prepare for a future where crawlers need verified access instead of relying on open scraping. To help SEOs take the first step, he shared Chris Lever’s guide on using Shopify’s new Web Bot Auth feature.

John Mueller has told SEO professionals that the way tools and crawlers access websites is likely to change. In a recent post on Bluesky, he shared a simple starter guide and said the topic of authentication for SEO tools will become increasingly important.


Authentication for your personal crawlers & SEO tools is going to be more and more of a topic. This is a simple guide to get started (though I imagine it’ll evolve significantly over time).

— John Mueller (@johnmu.com) September 22, 2025 at 6:47 PM

Mueller pointed readers to a practical walkthrough created by SEO consultant Chris Lever.

The guide explains how Shopify’s new Web Bot Auth feature can prevent the long-standing HTTP 429 (Too Many Requests) errors that interrupt large-scale site audits.

Why Google Is Signaling Change

Crawling has often relied on open access or IP-based workarounds. That system is breaking down as platforms limit anonymous scraping and tighten controls to prevent abuse.

Mueller’s comments suggest a move toward secure, signed requests that prove a crawler’s identity without depending on fixed IP addresses.
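To make "signed requests" concrete, here is a minimal sketch of how an HTTP Message Signatures (RFC 9421) signature base is built, signed and checked, which is the mechanism the IETF Web Bot Auth drafts build on. It assumes the covered components (`@authority`, `signature-agent`) and the `tag="web-bot-auth"` parameter described in those drafts. The drafts use Ed25519 keys; this sketch swaps in HMAC-SHA256 (also an RFC 9421 algorithm) so it runs on the standard library alone. The domain, agent URL, key ID and secret are all hypothetical.

```python
import base64
import hashlib
import hmac
import time


def signature_params(components, created, expires, keyid, tag):
    """Serialize @signature-params: the covered components plus their parameters."""
    inner = " ".join(f'"{name}"' for name in components)
    return (
        f"({inner});created={created};expires={expires}"
        f';keyid="{keyid}";tag="{tag}"'
    )


def signature_base(component_values, params):
    """RFC 9421 signature base: one line per covered component, then @signature-params."""
    lines = [f'"{name}": {value}' for name, value in component_values.items()]
    lines.append(f'"@signature-params": {params}')
    return "\n".join(lines)


def _mac(secret, base):
    # Stand-in for Ed25519: HMAC-SHA256 keeps the sketch stdlib-only.
    return hmac.new(secret, base.encode("ascii"), hashlib.sha256).digest()


def sign_request(authority, agent, secret, keyid, now=None, ttl=3600, label="sig1"):
    """Return the Signature-Agent, Signature-Input and Signature header values."""
    created = int(time.time()) if now is None else now
    components = {"@authority": authority, "signature-agent": agent}
    params = signature_params(components, created, created + ttl, keyid, "web-bot-auth")
    digest = _mac(secret, signature_base(components, params))
    return {
        "Signature-Agent": agent,
        "Signature-Input": f"{label}={params}",
        "Signature": f"{label}=:{base64.b64encode(digest).decode('ascii')}:",
    }


def verify(headers, authority, secret):
    """Recompute the signature base on the server side and compare MACs.

    Simplified: a real verifier parses the covered components out of
    Signature-Input and checks `expires` instead of assuming them.
    """
    label, params = headers["Signature-Input"].split("=", 1)
    sig_label, sig_value = headers["Signature"].split("=", 1)
    if label != sig_label:
        return False
    components = {"@authority": authority, "signature-agent": headers["Signature-Agent"]}
    expected = _mac(secret, signature_base(components, params))
    sent = base64.b64decode(sig_value.strip(":"))
    return hmac.compare_digest(expected, sent)
```

Because the signature covers `@authority`, a value generated for one domain fails verification on another. This matches the domain-specific behavior described below. With Ed25519, as the drafts specify, the site verifies using the crawler's public key (the drafts have the Signature-Agent header point to where those keys are published) rather than a shared secret. That is what lets a crawler prove its identity without relying on fixed IP addresses.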

He also raised questions about AI-powered assistants that browse on a user’s behalf inside the browser. These assistants blur the line between a human visitor and an automated bot and could force new rules about how traffic is authenticated.

Discussions on such standards are underway at the Internet Engineering Task Force (IETF).

Shopify’s Answer to a Frustrating Problem

While Google discusses future standards, Shopify has implemented a practical solution.

Web Bot Auth lets merchants generate domain-specific signatures inside the Shopify admin panel. SEOs add the generated values as HTTP headers in tools like Screaming Frog and Sitebulb, which can then crawl the store without triggering 429 errors.
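The same headers can be attached by a custom script. The following is a minimal sketch. It assumes you have copied the three values into environment variables so they never sit in source code. The variable names and the store URL are hypothetical. It attaches the values unchanged to a request built with Python's `urllib.request`:

```python
import os
import urllib.request

# Hypothetical environment variable names; the header names are the three Shopify generates.
HEADER_ENV_VARS = {
    "Signature": "SHOPIFY_BOT_SIGNATURE",
    "Signature-Input": "SHOPIFY_BOT_SIGNATURE_INPUT",
    "Signature-Agent": "SHOPIFY_BOT_SIGNATURE_AGENT",
}


def load_bot_auth_headers(env=os.environ):
    """Read the three Web Bot Auth values from the environment instead of hard-coding them."""
    missing = [var for var in HEADER_ENV_VARS.values() if not env.get(var)]
    if missing:
        raise KeyError(f"missing environment variables: {', '.join(missing)}")
    return {header: env[var] for header, var in HEADER_ENV_VARS.items()}


def build_request(url, headers):
    """Create a GET request that carries the signature headers exactly as copied."""
    request = urllib.request.Request(url, method="GET")
    for name, value in headers.items():
        request.add_header(name, value)
    return request


# Usage (performs a real network request):
# req = build_request("https://your-store.example/", load_bot_auth_headers())
# with urllib.request.urlopen(req, timeout=30) as resp:
#     print(resp.status)
```

`urllib` normalizes header names (for example to `Signature-input`). This is harmless because HTTP field names are case-insensitive. The header values themselves are passed through untouched.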

Each signature expires after at most three months, and the values should be stored as securely as passwords.
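Because signatures expire, a scheduled audit can start failing once the values lapse. This sketch assumes the Signature-Input value carries an `expires` Unix timestamp, as the IETF Web Bot Auth draft requires. Whether Shopify's values include it is an assumption to check against your own copy. The sketch flags signatures that are due for renewal:

```python
import re
import time

# Assumes an RFC 9421-style ";expires=<unix seconds>" parameter in Signature-Input.
_EXPIRES = re.compile(r";expires=(\d+)")


def seconds_until_expiry(signature_input, now=None):
    """Return seconds left before the signature's `expires` timestamp (negative if expired)."""
    match = _EXPIRES.search(signature_input)
    if match is None:
        raise ValueError("Signature-Input has no expires parameter")
    current = time.time() if now is None else now
    return int(match.group(1)) - int(current)


def needs_renewal(signature_input, warn_days=7, now=None):
    """True when the signature expires within `warn_days` days (or already has)."""
    return seconds_until_expiry(signature_input, now) < warn_days * 86400
```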

Early testers say the feature has turned Shopify crawling from a time-consuming task into a smooth and predictable process. Some report saving more than ten hours a month across multiple stores.

Web Bot Auth also works for preview themes, so pre-launch audits can run without being blocked.

How to Set Up Web Bot Auth

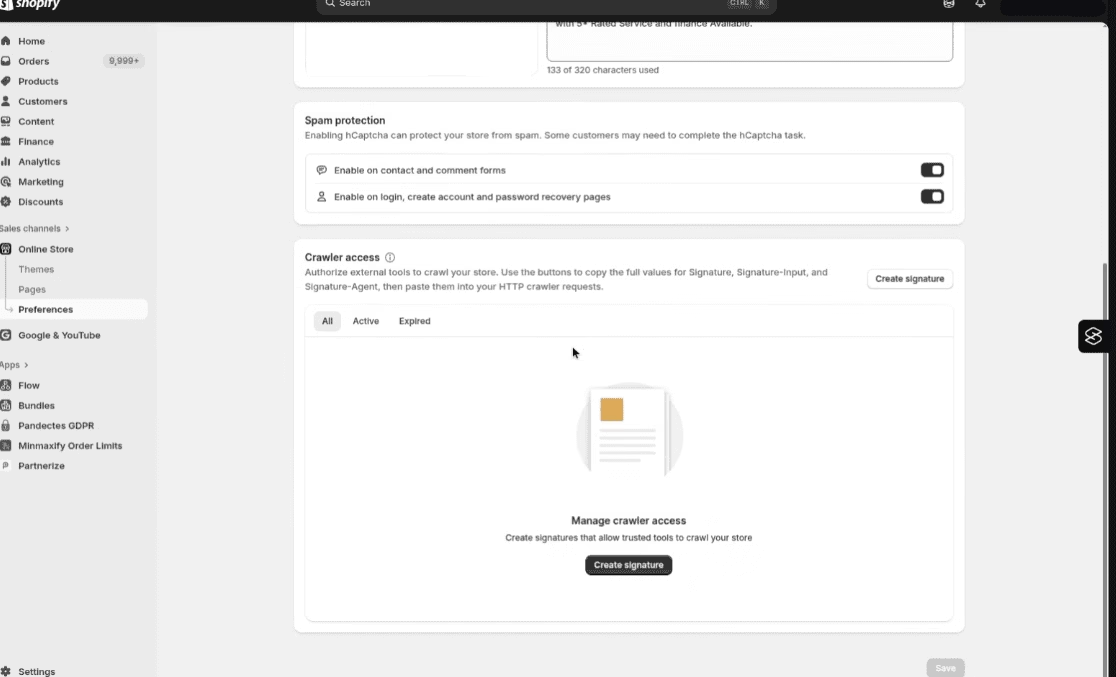

- Create a signature in Shopify – In Shopify Admin, go to Online Store → Preferences → Crawler Access. Click “Create signature,” name it, choose the domain, and set an expiry date (up to three months). Copy the three generated values: Signature, Signature-Input, and Signature-Agent.

- Configure Screaming Frog – In Screaming Frog, go to Configuration → HTTP Header. Add each header and its value exactly as Shopify provides. Save the settings and run your crawl.

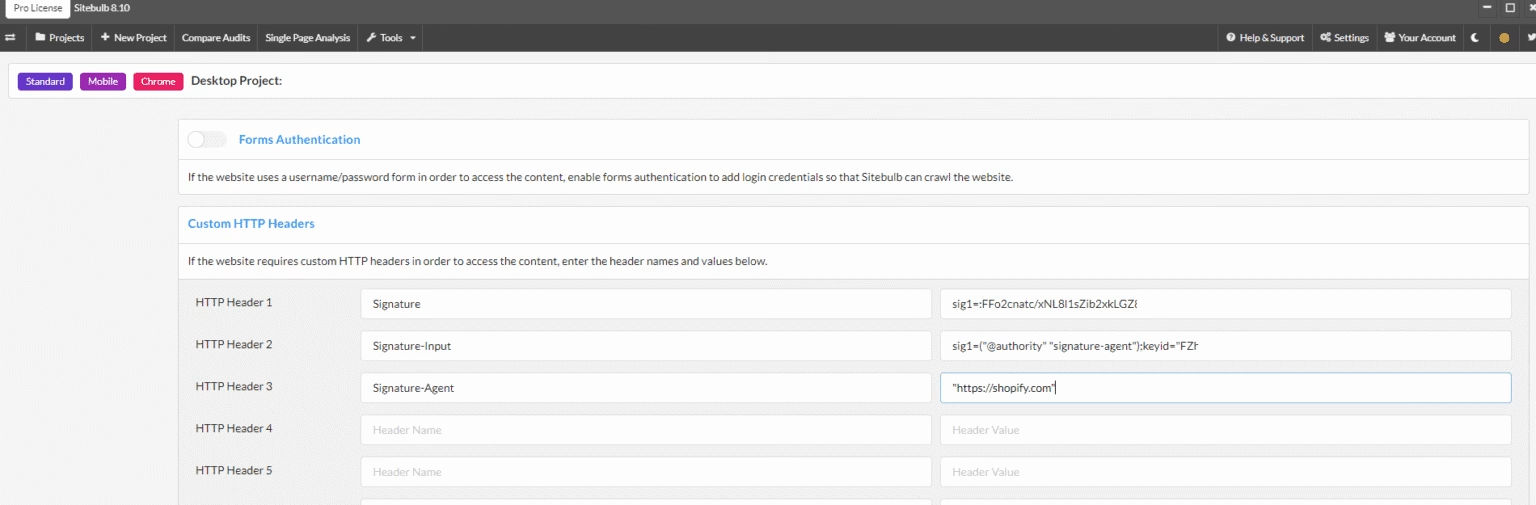

- Configure Sitebulb – Start a new audit in Sitebulb. Under Crawler Settings → Custom HTTP Headers, add the same three headers.
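Because each tool needs the values copied exactly, a quick pre-flight check can catch common paste errors before a long crawl. This sketch assumes the values follow RFC 9421 syntax. That means a shared label such as `sig1`, and a Signature value of the form `label=:base64:`. The function and its checks are illustrative and are not part of Shopify, Screaming Frog or Sitebulb:

```python
import base64
import binascii


def check_copied_headers(headers):
    """Return a list of problems found in copied Web Bot Auth header values (empty if none)."""
    problems = []
    for name in ("Signature", "Signature-Input", "Signature-Agent"):
        value = headers.get(name, "")
        if not value.strip():
            problems.append(f"{name} is missing or empty")
        elif value != value.strip():
            problems.append(f"{name} has leading or trailing whitespace")
    if problems:
        return problems

    # Signature and Signature-Input must refer to the same label (e.g. "sig1").
    sig_label, _, sig_value = headers["Signature"].partition("=")
    input_label, _, _ = headers["Signature-Input"].partition("=")
    if sig_label != input_label:
        problems.append(
            f"label mismatch: Signature uses {sig_label!r}, Signature-Input uses {input_label!r}"
        )

    # The signature bytes are base64 wrapped in colons (an RFC 8941 byte sequence).
    if not (sig_value.startswith(":") and sig_value.endswith(":") and len(sig_value) > 2):
        problems.append("Signature value is not wrapped in colons")
    else:
        try:
            base64.b64decode(sig_value[1:-1], validate=True)
        except binascii.Error:
            problems.append("Signature value is not valid base64")
    return problems
```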

What This Means for SEOs

These changes indicate that SEO work is moving toward a more secure and controlled environment. Platforms are tightening how bots access their data, but they are also building pathways for professionals to keep working efficiently.

For SEOs, that means adapting now instead of waiting for stricter gates to go up. Tools that rely on unrestricted crawling may start failing as more sites expect authenticated requests. Learning to use features like Shopify’s Web Bot Auth and staying informed about how Google and standards groups shape authentication will help keep audits reliable and data complete.

It also means the line between “bot” and “user” is going to get sharper. If your workflow uses AI-assisted browsing or automated testing, expect new questions about how those systems identify themselves.

The upside is that these changes bring stability and trust.

Authenticated crawlers reduce false blocks, wasted hours, and incomplete reports.

By preparing early, SEO teams can work faster, avoid technical headaches, and stay aligned with the direction big platforms are heading.

Key Takeaways

- Google’s John Mueller is preparing SEOs for an era where crawlers must verify their identity.

- Signed requests may replace IP-based access to websites.

- Shopify’s Web Bot Auth already prevents 429 crawl blocking and saves audit time.

- AI-driven assistants raise new questions about bot classification.

- Staying ahead of authentication trends protects future SEO workflows.
