
BERT Update Live for 70 Languages – December 9, 2019

Google has officially announced the rollout of BERT (Bidirectional Encoder Representations from Transformers) in Google Search across 70 languages. Google first rolled out BERT in October 2019, touting it as its latest and most reliable language processing algorithm. BERT has its origins in the Transformers project undertaken by Google engineers.

During the announcement of the BERT Algorithm Update, Google confirmed that its new language processing algorithm tries to understand words in relation to all the other words in a query, rather than one by one in order. This puts more weight on the intent and context of the search query and delivers the results the user is seeking.

The official Google SearchLiaison tweet says, “BERT, our new way for Google Search to better understand language, is now rolling out to over 70 languages worldwide. It initially launched in Oct. for US English.”

Here is the list of languages for which Google uses the BERT natural language processing algorithm to display search results:

Afrikaans, Albanian, Amharic, Arabic, Armenian, Azeri, Basque, Belarusian, Bulgarian, Catalan, Chinese (Simplified & Taiwan), Croatian, Czech, Danish, Dutch, English, Estonian, Farsi, Finnish, French, Galician, Georgian, German, Greek, Gujarati, Hebrew, Hindi, Hungarian, Icelandic, Indonesian, Italian, Japanese, Javanese, Kannada, Kazakh, Khmer, Korean, Kurdish, Kyrgyz, Lao, Latvian, Lithuanian, Macedonian, Malay (Brunei Darussalam & Malaysia), Malayalam, Maltese, Marathi, Mongolian, Nepali, Norwegian, Polish, Portuguese, Punjabi, Romanian, Russian, Serbian, Sinhalese, Slovak, Slovenian, Swahili, Swedish, Tagalog, Tajik, Tamil, Telugu, Thai, Turkish, Ukrainian, Urdu, Uzbek, Vietnamese, and Spanish.


Difference Between BERT and Neural Matching Algorithm

Google’s announcement of the November Local Search Algorithm Update opened a Pandora’s box of questions in the webmaster community. The whole hoo-ha about the update stems from the term “neural matching.”

It was only in October that Google announced the rollout of its BERT update, which is said to impact 10% of search results. With another language processing algorithm update now in place, the webmaster community is confused about what difference the two updates will make to the SERPs.

Google has patented many language processing algorithms; BERT and Neural Matching are just two of them. The Neural Matching algorithm has been part of search since 2018, and the BERT update followed it in October 2019.

As of now, Google has not confirmed whether the Neural Matching Algorithm was replaced by the BERT or if they are working in tandem. But the factors that each of these algorithms uses to rank websites are different.

The BERT algorithm is derived from Google’s ambitious Transformers project, a novel neural network architecture developed by Google engineers. BERT tries to decode the relatedness and context of search terms through a process of masking: some words are hidden, and the model predicts them from the surrounding words, which is how it learns how each word relates to the others.
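The masking idea can be pictured with a toy sketch: hide one word of a query at a time, so that a model would have to predict it from the words on both sides. The Python below only generates the masked variants. It does not call BERT or any real model, and the `mask_each_token` helper and the example query are illustrative assumptions rather than anything Google has published.

```python
def mask_each_token(query: str, mask: str = "[MASK]") -> list[str]:
    """Return one copy of the query per word, with that word hidden.

    Hypothetical helper for illustration only; a real masked language
    model would then predict the hidden word from the remaining context.
    """
    tokens = query.split()
    variants = []
    for i in range(len(tokens)):
        # Keep every word except the i-th, which is replaced by the mask.
        masked = tokens[:i] + [mask] + tokens[i + 1:]
        variants.append(" ".join(masked))
    return variants


if __name__ == "__main__":
    for variant in mask_each_token("best pizza near central station"):
        print(variant)
```

Each variant keeps the context to the left and to the right of the hidden word, which is the "bidirectional" part of the name.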

Neural Matching, on the other hand, is closely related to research that Google did on fetching highly relevant documents from the web. The idea is primarily to understand how words are related to concepts.

The Neural Matching algorithm uses a super-synonym system to understand what the user meant by typing in the search query. This enables users to get highly relevant local search results even if the exact query terms don’t appear in the business name or description.

For local business owners, the Neural Matching algorithm will better rank businesses even when their business name or description isn’t optimized for user queries. Neural Matching in Local Search results will be a boon to businesses, as the primary ranking factor will be the relatedness of words and concepts.

Basically, BERT and Neural Matching work differently and are used in different verticals of Google. However, both algorithms are trained to fulfill Google’s core philosophy: making search results highly relevant.

Local Search Algorithm Update – November 2019

Google has confirmed that the fluctuations in organic search reported throughout November were the result of the Nov. 2019 Local Search Update, the official name coined by Google. There was a lot of discussion about a possible algorithm update during the first and last weeks of November. However, Google did not comment until December 2nd, when the official announcement came via a tweet by Danny Sullivan from the official Google SearchLiaison Twitter handle.

Google also said that webmasters need not make any changes to their sites, as this local algorithm update is all about improving the relevance of search results based on user intent. The search engine giant also confirmed that the update has a worldwide impact across all languages.

The new update helps users find the most relevant local search results that match their search intent. Neural matching itself is not new, however: Google has been using it in search results since 2018, primarily to understand how words are related to concepts.

The new algorithm understands the concept behind a search by working out how the words in the query relate to each other. Google says it has a massive directory of synonyms that helps the algorithm do this neural matching.

Starting November 2019, Google uses its AI-based neural matching system to rank businesses in Local Search results. Until then, Google relied on the exact words found in a business name or description to rank businesses in local search.

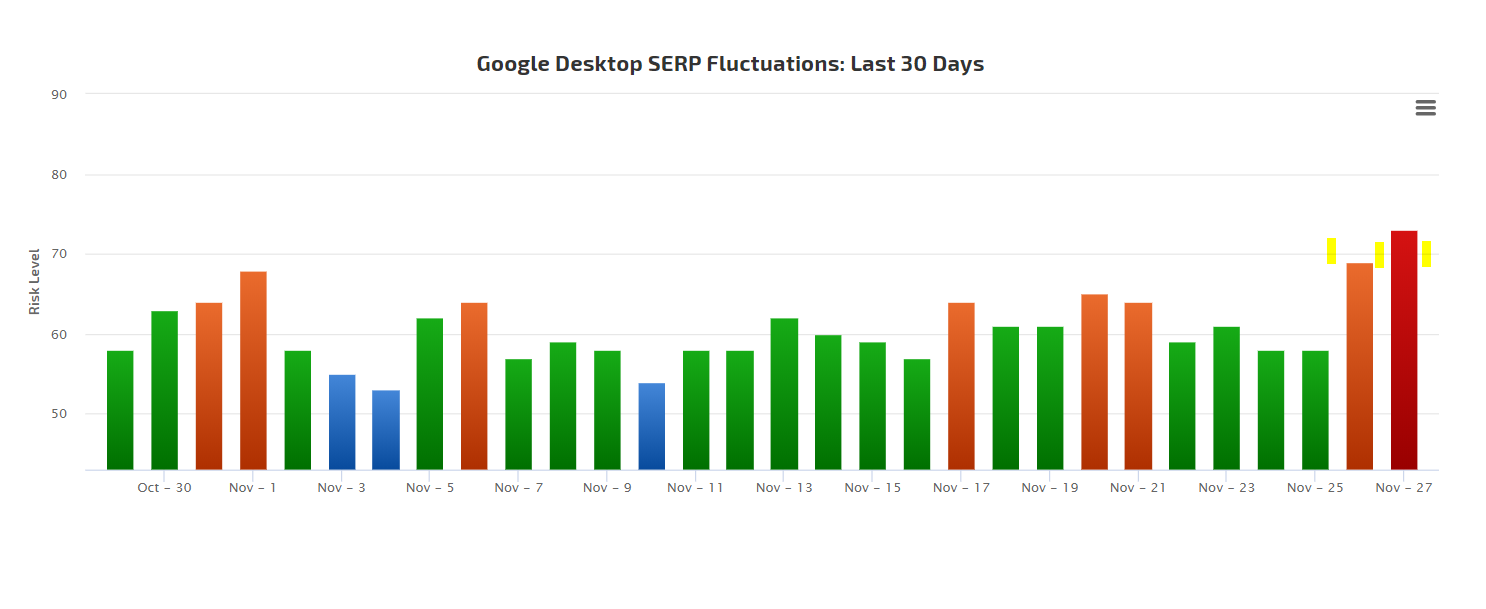

Unconfirmed Google Algorithm Update – November 27, 2019

There was a major tremor in some algorithm trackers, which could indicate another Core Update as significant as the one rolled out on November 8, 2019. A few algorithm trackers picked up the heat, while others showed only a small spike as of the 27th.

Unconfirmed Google Algorithm Update – November 8, 2019

There is a lot of chatter in the SEO arena about a major shift in website rankings during the second week of November. However, there is no official confirmation from Google, which means it could be one of the updates that Google has confirmed happen hundreds of times a year.

The chatter focused on websites in categories such as recipes, travel, and web design. A closer look at some of these sites revealed no major on-page issues. That said, a deeper link analysis gave us a fair idea of the answer to the pertinent question, “WHY US?”

Recipe sites, Travel Blogs, and Web Design companies get a lot of footer links, and most of the time, they are out of context. This, according to the Google link scheme document, is a spammy practice. According to Google, “widely distributed links in the footers or templates of various sites” will be counted as unnatural links. This may have played spoilsport, resulting in a drop in rankings.

After the online chatter, Google came up with an official confirmation via a tweet on the SearchLiaison Twitter handle, stating that there had not been any broad updates in the past weeks. However, the tweet once again reiterated that several updates happen on a regular basis.

In the Twitter thread, Google also gave examples of the type of algorithm updates that will have a far-reaching impact on search and how the search engine giant informs webmasters prior to the launch of such updates to ensure that they are prepared.

Only a few algorithm trackers registered the impact.

Google BERT Algorithm Update – October 2019

It’s been close to five years since Google announced anything as significant as the BERT Update. The last time an update of this magnitude was launched was back in 2016 when the RankBrain algorithm was rolled out.

According to Google’s official announcement, the new BERT update impacts 10% of searches, starting with US English. The statement says that BERT is the most significant leap forward in the past five years, and one of the biggest leaps ahead in the history of search.

With so much emphasis given to the latest Google Algorithm Update, BERT is likely to go into the SEO history books along with its predecessors: Penguin, Panda, Hummingbird, and RankBrain. The update will affect 1 out of 10 organic search results on Google, with a major impact on Search Snippets, aka Featured Snippets.

Bidirectional Encoder Representations from Transformers, abbreviated as BERT, is a machine learning advancement from Google’s Artificial Intelligence research. The BERT model processes words in relation to all the other words in a sentence, rather than one by one in order. This puts more weight on the intent and context of the search query and delivers the results the user is seeking.

The announcement about the BERT update was made through the official Google SearchLiaison Twitter handle. The tweet read, “Meet BERT, a new way for Google Search to better understand language and improve our search results. It’s now being used in the US in English, helping with one out of every ten searches. It will come to more counties and languages in the future.”

The new BERT update brings Google one step closer to perfecting its understanding of natural language. This also means that voice search results should see significant improvement.

To know more about Google’s BERT Update, read our extensive coverage on its origin, concept, and impact on Google search results.

September 2019 Core Algorithm Update Starts Rolling Out

Google confirmed the rollout of the September 2019 Core Update via its SearchLiaison Twitter handle. The tweet read: “The September 2019 Core Update is now live and will be rolling out across our various data centers over the coming days.”

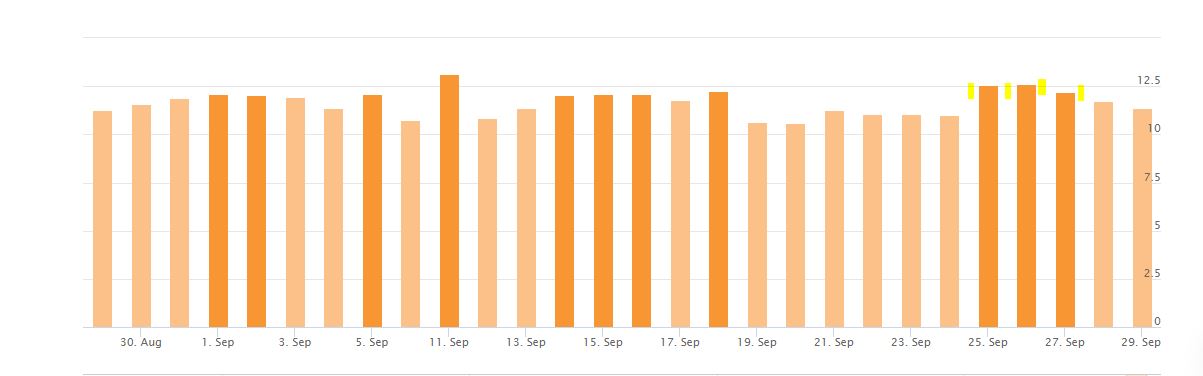

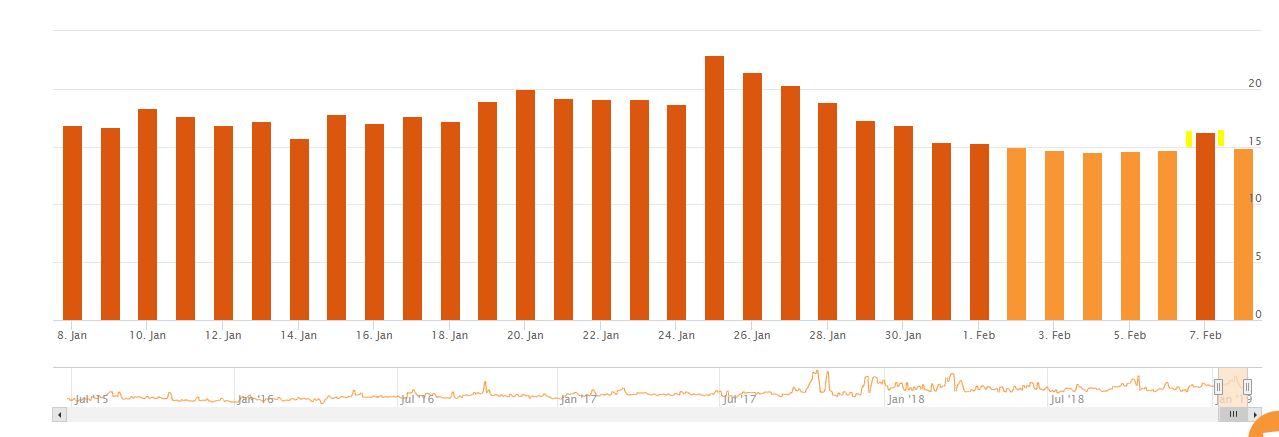

Unlike the other Broad Core Updates launched by Google, the September 2019 Core Update didn’t have a massive impact on websites. However, the following algorithm trackers registered fluctuations in the SERPs:

- Moz

- SERPMetrics

- Algoroo

- Accuranker

- RankRanger

- SEMRush Sensor

September 2019 Core Algorithm Update Pre-announcement

Google once again confirmed, via its official SearchLiaison Twitter handle, that a new Broad Core Algorithm update will be rolling out. This is the second time that Google has pre-announced the rollout of an algorithm update. The last time it did so was before the rollout of the June 2019 Core Update.

The June 2019 Core Update had a major impact on websites that failed to implement the E-A-T guidelines. Going by the current pattern, the new update is likely to have a far-reaching impact on websites that fail to provide Google with quality signals. In addition, the new update comes after Google made three big announcements the previous week: the new nofollow link update, Google Reviews, and Key Moments in videos.

We will keep you posted on how the new broad Core Update impacts the SERP appearance of websites.

Google Search Reviews Updated – September 16, 2019

Before the official Algorithm Update announcement, the last time that the search engine giant issued a public statement was on June 4, 2019, when it rolled out the Diversity Update to reduce the number of results from the same site on the first page of Google search.

However, on September 16, the official Google Webmaster Twitter account announced that a new algorithm is now part of the crawling and indexing process of review snippets/rich results. According to the tweet, the new update will make significant changes in the way Google Search Review snippets are displayed.

Here is what the official Google announcement says about the update:

“Today, we’re introducing an algorithmic update to review snippets to ease implementation: – Clear set of schema types for review snippets – Self-serving reviews aren’t allowed – Name of the thing you’re reviewing is required.”

According to Google, review rich results have been helping users find the best businesses and services. Unfortunately, reviews have been widely misused, which has prompted several updates since Google introduced them. The impact of Google Search Reviews has become increasingly visible in recent times.

The official blog post announcing the rollout of the new Google Search Review algorithm update says it helps webmasters across the world better optimize their websites for Google Search Reviews. Google has introduced 17 standard schemas for webmasters so that invalid or misleading implementations can be curbed.

Before the update, webmasters could add Google Search Reviews to any web page using the review markup. However, Google identified that some of the web pages that displayed review snippets did not add value to the users. A few sites used the review schema to make them stand out from the rest of the competitors.

Putting an end to the misuse, Google has limited review snippets to 17 schema types. Starting today, Google Search Reviews will be displayed only for pages that fall under these 17 types and their respective subtypes.

List of Review Schema Types Supported for Google Search Reviews

- schema.org/Book

- schema.org/Course

- schema.org/CreativeWorkSeason

- schema.org/CreativeWorkSeries

- schema.org/Episode

- schema.org/Event

- schema.org/Game

- schema.org/HowTo

- schema.org/LocalBusiness

- schema.org/MediaObject

- schema.org/Movie

- schema.org/MusicPlaylist

- schema.org/MusicRecording

- schema.org/Organization

- schema.org/Product

- schema.org/Recipe

- schema.org/SoftwareApplication

Self-serving reviews aren’t allowed for LocalBusiness and Organization

One of the biggest hiccups Google faced in displaying genuine reviews was entities adding reviews about themselves via third-party widgets and markup code. Starting today, Google has stopped supporting Google Search Reviews for the schema types LocalBusiness and Organization (and their subtypes) when the reviews are added through third-party widgets and markup code.

Add the name of the item that’s being reviewed

The new Google Search Reviews Algorithm Update mandates the name property to be part of the schema. This will make it mandatory for businesses to add the name of the item being reviewed. This will give a more meaningful review experience for users, says Google.
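The two markup rules described above (a supported schema type and a required `name`) can be checked with a few lines of Python. This is a minimal sketch under stated assumptions: the `check_review_markup` function is hypothetical, it expects a single JSON-LD `Review` object whose `itemReviewed` has a plain string `@type`, and it does not resolve subtypes or detect self-serving reviews. It is not Google’s validator.

```python
import json

# The 17 top-level types listed in this article (subtypes are not resolved here).
SUPPORTED_TYPES = {
    "Book", "Course", "CreativeWorkSeason", "CreativeWorkSeries", "Episode",
    "Event", "Game", "HowTo", "LocalBusiness", "MediaObject", "Movie",
    "MusicPlaylist", "MusicRecording", "Organization", "Product", "Recipe",
    "SoftwareApplication",
}


def check_review_markup(json_ld: str) -> list[str]:
    """Return a list of problems found in a JSON-LD review snippet.

    Hypothetical helper for illustration; an empty list means both
    checks passed.
    """
    data = json.loads(json_ld)
    item = data.get("itemReviewed", {})
    problems = []

    item_type = item.get("@type")
    if item_type not in SUPPORTED_TYPES:
        problems.append(f"unsupported itemReviewed type: {item_type!r}")

    # The update makes the name of the reviewed item mandatory.
    if not item.get("name"):
        problems.append("itemReviewed.name is missing")

    return problems


if __name__ == "__main__":
    snippet = json.dumps({
        "@context": "https://schema.org",
        "@type": "Review",
        "itemReviewed": {"@type": "Product", "name": "Example Widget"},
        "reviewRating": {"@type": "Rating", "ratingValue": "4"},
    })
    print(check_review_markup(snippet))
```

A check like this is only a first pass before running the page through Google’s own structured data testing tools.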

Google Diversity Update Roll Out – June 4, 2019

Just a few days after the incremental June 2019 Core Update, Google officially confirmed that another update is now part of its Algorithm.

The new Diversity Update curbed multiple search results from the same website from appearing on the first page of Google search. Our first impression of this new tweak is that the impact of it was pretty minor.

But discussions are happening in forums about how the update will impact branded queries, which may require Google to list several pages from the same site.

The announcement about the roll-out was made through the official Twitter handle of Google Search Liaison. The tweet read, “Have you ever done a search and gotten many listings all from the same site in the top results?

We’ve heard your feedback about this and wanting more variety. A new change now launching in Google Search is designed to provide more site diversity in our results.”

“This site diversity change means that you usually won’t see more than two listings from the same site in our top results.

However, we may still show more than two in cases where our systems determine it’s especially relevant to do so for a particular search,” reads the official statement from Google.

One of the major changes to expect after the Diversity Update concerns subdomains. Google has stated that subdomains will now generally be treated as part of the root domain. This means that listings from a subdomain count toward the same limit of roughly two results per site.

Here is what Google says, “Site diversity will generally treat sub-domains as part of a root domain. IE: listings from sub-domains and the root domain will all be considered from the same single site. However, sub-domains are treated as separate sites for diversity purposes when deemed relevant to do so.”
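The rule Google describes can be illustrated with a small filter that keeps at most two listings per site and counts subdomains toward their root domain. This is a sketch, not Google’s implementation: the `root_domain` and `limit_per_site` helpers are hypothetical, the root-domain extraction naively keeps the last two host labels (so it is wrong for suffixes such as `co.uk`), and the relevance-based exceptions Google mentions are not modelled.

```python
from collections import Counter
from urllib.parse import urlparse


def root_domain(url: str) -> str:
    """Naive root-domain guess: the last two labels of the hostname."""
    host = urlparse(url).hostname or ""
    return ".".join(host.split(".")[-2:])


def limit_per_site(urls: list[str], max_per_site: int = 2) -> list[str]:
    """Keep results in ranked order, dropping extras from the same site."""
    seen = Counter()
    kept = []
    for url in urls:
        site = root_domain(url)
        if seen[site] < max_per_site:
            kept.append(url)
            seen[site] += 1
    return kept


if __name__ == "__main__":
    ranked = [
        "https://example.com/a",
        "https://blog.example.com/b",
        "https://shop.example.com/c",
        "https://other.org/x",
    ]
    for url in limit_per_site(ranked):
        print(url)
```

In the example, the third `example.com` listing is dropped because the two subdomain results ahead of it already count against the same root domain.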

June 2019 Core Algorithm Update Roll Out

The June 2019 Core Update was slowly rolled out from Google’s data centers located in different countries. The announcement about the rollout was made from the same Google SearchLiaison Twitter account that made the pre-announcement.

The algorithm trackers detected a spike in their graphs. This indicated that the latest broad core algorithm update, officially named the June 2019 Core Update, had started to affect SERP rankings.

Since Google updated its Quality Rater Guidelines a few days before the rollout, with more emphasis on ranking quality websites in search, the latest update may be a quality patch for the search results page.

We will give you a detailed stat of the impact of the algorithm update on SERP as soon as we get the data from the algorithm trackers. Also, our detailed analysis of the websites hit by the update and the possible way to recover will follow.

June 2019 Core Algorithm Update Preannouncement

Google SearchLiaison officially announced that the search engine giant will roll out an important Algorithm Update on June 3rd.

The latest Google algorithm update, which will be a Broad Core Algorithm Update like the one released in March, will officially be called the June 2019 Core Update.

It was the first time that Google pre-announced the launch of an Algorithm update.

Here is the official Twitter announcement:

Tomorrow, we are releasing a broad core algorithm update, as we do several times per year. It is called the June 2019 Core Update. Our guidance about such updates remains as we’ve covered before. Please see this tweet for more about that:https://t.co/tmfQkhdjPL

— Google SearchLiaison (@searchliaison) June 2, 2019

Unofficial Google Algorithm Update of March 27th

Yes, you heard it right. Google made some significant changes to the algorithm during the final few days of March.

We have seen Google make tweaks after the rollout of Broad Core Algorithm updates, but the one we witnessed here was huge. Some algorithm sensors detected more significant ranking fluctuations than on March 12th, when Google launched its confirmed March 2019 Core Update.

The fluctuations that started on March 27th are yet to stabilize, and more and more webmasters are taking to forums after their website traffic got hit.

The latest tweak has come as a double blow for a few websites as they lost the traffic and organic ranking twice in the same month.

Google Officially Calls March Broad Core Algorithm Update as “March 2019 Core Update”

As I mentioned in my earlier update, Google representatives have a history of snubbing the names that SEOs give to algorithm updates.

Usually, their criticism ends without attributing any name to the update, but it seems Google is now relaxing its stance and giving official names to its updates.

The official Google SearchLiaison Twitter handle announced on Thursday that Google would like to call the Broad Core Algorithm Update of March 12th the “March 2019 Core Update.”

“We understand it can be useful to some for updates to have names. Our name for this update is “March 2019 Core Update.”

We think this helps avoid confusion; it tells you the type of update it was and when it happened,” read the tweet posted by Google SearchLiaison.

With this, let’s put an end to the debate over the nomenclature and focus more on the recovery of the sites affected by the March 2019 Core Update.

Recovering Sites Affected by March 2019 Core Update AKA Florida 2

Have you been hit by the March 2019 Core Update? There are several reasons why a website may lose traffic and rankings after Google rolls out an algorithm update. In most cases, the SEO strategies that the website used to rank in the SERPs backfire; in other instances, Google finds a better site that provides superior quality content as a replacement.

In both these cases, the plunge that you’re experiencing can be reversed by implementing a well-thought-out SEO strategy with a heavy focus on Google’s E-A-T quality signals.

However, our initial analysis has some good news for webmasters. The negative impact of the latest update is far less than we thought.

Interestingly, there are more positive results, and the discussion about the same is rife across all major SEO forums.

This makes us believe that the Broad Core Algorithm update on March 12 is more of a rollback of a few previous updates that may have given undue rankings for a few websites.

Importantly, we found that sites with high authority once again received a boost in their traffic and rankings.

We also found that websites, which had a rank boost last year by building backlinks through Private Blogging Networks, were hit by March 2019 Core Update, whereas the ones that had high-quality, natural backlinks received a spike.

If you’re one of many websites that were affected by the Google March 2019 Core Update, here are a few insights about the damage caused to sites in the health niche.

Health Niche

According to the data provided by SEMRush, Healthcare websites saw a massive fluctuation in traffic and rankings after the recent March 2019 Core Update.

The website has also listed a few top losers and winners. We did an analysis of the top 3 losers and here is what we found:

MyLVAD

MyLVAD is listed as one of the top losers in the Health category according to SEMRush. The Medic Update hit this site quite badly in August 2018, and it seems like the latest March 2019 Core Update has also taken a significant toll.

According to the SEMRush data, the keyword position of MyLVAD dropped by 11 positions on Mar 13. MyLVAD is a community and resource for people suffering from advanced congestive heart failure and relying on an LVAD implant.

We did an in-depth analysis of the site and found that it does not meet Google’s E-A-T quality standards.

The resources provided on the site are not credited to experts. Crucially, the contact details are missing on the site.

Since it’s more like a forum, the website has more user-generated content. As this website falls under the YMYL category, it’s imperative to provide an author bio (the designation of the doctor in this specific case) to increase the E-A-T rating.

Also, details such as ‘contact us’ and the people responsible for the website are missing.

PainScale

PainScale is a website with a companion app that helps users manage pain and chronic disease. The site got a rank boost after the September 2018 update, and until December that year, everything was running smoothly.

The traffic and ranking started displaying a downward trend after January, and now the Florida 2 Update has reduced it further.

On analyzing the website, we found that it provides users with information about pain management. Once again, the authority of the content published on this website is questionable.

Though the site has rewritten some content from Mayo Clinic and other authority sites, aggregation is something that Google does not like.

The website also has a quiz section that provides tools to manage pain. However, the website tries to collect the health details of the users and then asks them to sign up for PainScale for FREE.

Google has an aversion to this particular method, as it is concerned about the privacy of its users. This could be one of the reasons for the drop in traffic and rankings of PainScale after the March 2019 Core Update.

Medbroadcast

This is yet another typical example of a YMYL website that Google puts under intense scrutiny. Medbroadcast gives a lot of information regarding health conditions and tries to provide users with treatment options.

Here again, like other websites on this list, there is no information regarding the author.

Moreover, the site has a strange structure, with a few URLs opening in subdomains. The website has also placed close to 50 URLs in the footer of the homepage and other inside pages, making it look very spammy.

This site also received undue traffic boosts after the Google Medic Update of August 2018. The stats show that the traffic increased after the Medic Update and started to decline at the beginning of January.

Once again, the emphasis is on E-A-T quality signals. The three examples listed above point to how healthcare sites that failed to follow the practices in Google’s Quality Rater Guidelines were hit by the Florida 2 Update.

Here are a few tips to improve your website’s E-A-T rating:

1. Add Author Byline to All Blog Posts

Google wants to know the authenticity of the person who is providing information to users.

If the site falls under the YMYL category, which includes websites in the healthcare, wellness, and finance sectors, the author should be someone who is an expert in that field. Google wants to ascertain that trustworthy and certified authors draft the content displayed to its users.

Getting the content written by content writers is a trend largely seen among YMYL sites. Nevertheless, Google hates it and only wants to promote high-quality, trustworthy content.

2. Remove Scraped/Duplicate Content

Google calculates the E-A-T score of a website by analyzing individual posts and pages. If you’re scraping or duplicating content from another website, chances are you may be hit by an algorithm update.

As seen in one of the above-mentioned examples, paraphrasing content doesn’t make it unique, and Google can identify these types of content very easily.

So, if you think your website has thin, scraped, or rewritten content, it would be ideal to remove it. For YMYL websites, ensure that the content is written by an expert in your niche.

3. Invest Time in Personal Branding

Make sure that your website has an “About Us” page and you’re providing valuable inputs to Google in the form of schema markup.

In addition, positive testimonials and customer reviews, both within the site and outside, can boost the trustworthiness of your website.

Google’s quality rater guidelines also ask webmasters to display the contact information and customer support details for YMYL sites.

4. Focus on the Quality of Backlinks Rather Than Quantity

The Google search quality guidelines suggest that websites with high-quality backlinks have a superior E-A-T score.

Investing time in building low-quality links through PBNs and blog comments may invite Google’s wrath and can adversely affect a website’s E-A-T rating.

It’s highly recommended to use white hat techniques such as blogger outreach and broken link building as part of the SEO strategy to get high-quality links.

5. Secure Your Site With HTTPS

The security of its users is a priority for Google, and that’s why it’s pushing websites to get SSL certified. HTTPS is now one of the ranking factors, and it’s also one of the ways to improve the E-A-T rating of websites.

Also, it has to be noted that Google Chrome now shows all non-HTTPS sites as insecure, which is a clear indication of how Google values the privacy of its users.

To know more about the factors that Google uses to rank health websites, read our in-depth article, “Advanced SEO for Healthcare and Medical Websites: Tips to Improve Search Quality Rating.”

Here are a few rumors from the Black Hat World about the Latest Google March 2019 Core Update AKA the Florida 2 Update

“There are 5 projects of mine which are very, very similar to each other. All targeting Beauty/Health niche, all of them have a lot of great content (10k+ articles) and all of them are build on expired domains with a nice brandable name,” says a user going by the name yayapart.

“The G Core Update hit 4 of my 5 projects. One of them, actually the oldest one of them, got a huge push and increased it’s organic traffic about 40%.

The other 3 projects that are affected lost all their best rankings. I don’t see any pattern here yet but it hit me hard,” he added.

Another user going by the name Jeepy says, “Health Niche. This site got hit by medic update and now it’s rising back without doing anything. Right…”

Confirmed: Google March 2019 Core Update AKA Florida 2 Update – March 12, 2019

The official SearchLiaison Twitter handle of Google confirmed that a Broad Core Algorithm update started rolling out on March 12th.

Like other broad core algorithm updates, this one was rolled out in phases, which increased uncertainty about the stabilization of the “SERP dance”. SEOs started calling it the Florida 2 Update.

“This week, we released a Broad Core Algorithm update, as we do several times per year. Our guidance about such updates remains as we’ve covered before,” read the tweet posted on the official Google SearchLiaison handle.

Read our blog to know more about the latest Broad Core Algorithm Update.

Our analysis found that the Google March 2019 Core Update reversed the undue rankings that a few websites got after the Medic Update of August 2018.

In addition to this, most of the sites hit by the update used low-quality links to increase their authority.


Google Algorithm Update – March 1st, 2019

In my earlier update, I had predicted that Google was preparing for some big changes. Now, validating my ESP, the latest Google algorithm update rolled out on March 1st, 2019 seemed bigger than what we initially thought. There are reports that Google displayed more than 19 search results on a single page for a few search terms during this period.

There are also murmurs about Google giving more preference to websites that have in-depth content. Interestingly, Dr. Peter J. Meyers of Moz found that Google displays well-researched articles in the results even for a few buyer intent keywords.

Google possibly wants a mix of products and information to feature in its search. This way, users who are confused with products can read and be informed before making the purchase decision.

There are also chatters about the drop in the number of image search results after the latest Google update on March 1st.

An interesting analytics screenshot shared by Marie Haynes depicts how one of her clients got a massive boost in organic traffic between February 27th and March 1st.


Google Algorithm Update – February 27, 2019

Google made a lot of tweaks to its algorithm in February 2019, as there was yet another spike in the algorithm trackers, suggesting the rollout of an algorithm update. A similar spike was witnessed on the 22nd of the month, but the chatter soon subsided, probably because of its limited impact on websites.

However, it seemed like something big was brewing for the coming days that would make significant changes to Google’s SERPs.

The spike was visible on the following trackers:

- Accuranker

- Advanced Web Ranking

- MozCast

- Rank Ranger

- SEMRush Sensor

- SERPmetrics

Google Algorithm Update- February 22, 2019

There are talks about an algorithm update, but this time, the impact is not so far-reaching. All major algorithm trackers detected a sudden spike in their ranking sensors, but it didn’t last long.

It seems like Google may have done a little tweaking to its algorithm during the weekend, especially on Friday.

Other than a few chatters here and there regarding lost traffic, there is nothing concrete to underscore this as a significant algorithm update.Since it’s widely talked about in blogs and SEO forums, let’s look at a few trackers that sensed the update.

![]()

![]()

![]()

![]()

![]()

![]()

![]()

Google Algorithm Update – February 5, 2019

There is a lot of chatter about a Google algorithm update that has affected many websites, mostly ones in the UK. The stats were more or less calm after Jan 16th, but we have noticed a sudden increase on all major algorithm update trackers.

Some algorithm trackers suggest the update rolled out during February 5-6 is more devastating than the one rolled out in January. We will keep you posted as we dig deeper into the sites affected by the algorithm update and diagnose the reasons for the drop in rankings.

Here are a few stats:

SERPmetrics

AccuRanker

Moz Weather

Rank Ranger

SEMRush Sensor

![]()

Google Algorithm Update – January 18, 2019

We had announced earlier this week that Google rolled out an incremental Algorithm update – the first in 2019. The impact of the Algorithm Update was hard on News websites and Blogs of various niches. This is why we named the latest Google Update as the “Newsgate Algorithm Update.”

A new document released by Google on 16th January 2019 corroborates our findings, as it provides advice and tips to help news publishers succeed in 2019. Our analysis had found that the algorithm update rolled out during the second week of January affected news sites that “rewrote content” or “scraped content from other sites.”

The latest document released by Google proves that we were right. There are two specific sections in the document titled “Ways to succeed in Google News,” which recommend News Publishers to stay away from publishing rewritten and scraped content.

What the Google document says:

- Block scraped content: Scraping commonly refers to taking material from another site, often on an automated basis. Sites that scrape content must block scraped content from Google News.

- Block rewritten content: Rewriting refers to taking material from one site and then rewriting that material so that it is not identical. Sites that rewrite content in a way that provides no substantial or clear added value must block that rewritten content from Google News. This includes, but is not limited to, rewrites that make only very slight changes or those that make many word replacements but still keep the original article’s overall meaning.

In addition to this, the Ways to Succeed in Google News also highlights a few best practices that News Publishers have to keep in mind before publishing a story.

- Descriptive and Clear Titles

- Displaying accurate date and time using structured data

- Avoid duplicate, rewritten or scraped content

- Using HTTPS for all posts and pages
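As an illustration of the structured data practice listed above, here is a minimal sketch that builds schema.org `NewsArticle` markup with publication and modification dates. The function name and the article values are hypothetical; the Google document quoted here does not prescribe this exact markup, so treat it as one reasonable way to expose a date, time, and byline to crawlers.

```python
import json
from datetime import datetime, timezone


def news_article_jsonld(headline, author_name, published, modified=None):
    """Build a schema.org NewsArticle object and return it as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        # ISO 8601 timestamps that include the timezone offset.
        "datePublished": published.isoformat(),
        "dateModified": (modified or published).isoformat(),
    }
    return json.dumps(data, indent=2)


# Hypothetical article values, for illustration only.
markup = news_article_jsonld(
    "Example headline",
    "Jane Doe",
    datetime(2019, 1, 16, 9, 30, tzinfo=timezone.utc),
)
print(markup)
```

The resulting JSON would typically be placed inside a `<script type="application/ld+json">` tag in the page head.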

The advice and tips provided in the document can help news websites affected by the latest Google algorithm update to recover.

Also, Google has placed emphasis on the transparency of news publishers, which has more to do with the search engine giant’s EAT Guidelines.

The new Google News guidelines ask publishers to be transparent by letting the readers know who published the content.

The advice Google gives is to include a clear byline, a short description of the author, and the contact details of the publication.

According to Google, providing these details to the readers and to the Google bot can help in filtering out the “sites or accounts that impersonate any person or organization, or that misrepresent or conceal their ownership or primary purpose.”

Google also warns news publishers not to engage in link schemes that are intended to manipulate PageRank of other websites.

Google Algorithm Update – January 16, 2019

This was Google’s first significant algorithm update for 2019. This time, the target was news sites and blogs! The data from SEMrush Sensor suggests that the “Google Newsgate Algorithm Update” touched a high-temperature zone of 9.4 on Wednesday.

Speculation about an update had been in the air for the last few days. The websites that were hit the worst include ABC’s WBBJTV, FOX’s KTVU, and CBS17.

![]()

Google announced earlier that every year, it rolls out around 500–600 core algorithm updates. In addition to this, there are broad core algorithm updates that Google rolls out three to four times a year.

These updates come with a major rank shift in the SERP with a few websites seeing a spike in organic rankings while others experience a dip.

However, the update made drastic changes to the results shown in the featured snippets.

In addition to the news websites, the “Google Newsgate Algorithm Update 2019” has also affected blogs in niches such as sports, education, travel, government, and automotive sites.

According to the Google Algorithm Weather Report by MozCast, the climate was rough during the 9th and 10th, suggesting an algorithm update.

![]()

The graph has shown significant fluctuations in the weather, especially during January 5th and 6th. After a few regular days, the weather deteriorated further, which may be a signal of two separate Google Algorithm updates within the same week.

SEO communities are rife with discussions about the update as many websites were affected by the algorithm update.

“Travel – All whitehat, good links, fresh content, aged domain, and all the good stuff. Was some dancing around Dec and then, wham, 3rd page,” said zippyants, a Black Hat Forum member, on Thursday.

“Big changes happening in the serps since Friday for us. Anyone noticing an uptick or downward slide of long-tail referrals? First time we’ve seen much since the big changes in August/September,” asked a user SnowMan68 via Webmaster World.

“Yes! Today, the signals are quite intense. Probably going on for past 4 days No changes seen on the sites though,” answered a Webmaster World user arunpalsingh to one of the questions asked in the forum.

In addition to this, the Google Grump Tool from AccuRanker has also suggested a “furious” last two days. This may be an indication that the algorithm update was rolled out in phases.

![]()

According to our early analysis, the sites that were affected by Google’s first Algorithm Update in 2019 are the ones that publish questionable news.

Also, we saw a nosedive in the traffic of news sites that rewrote content without adding any new value.

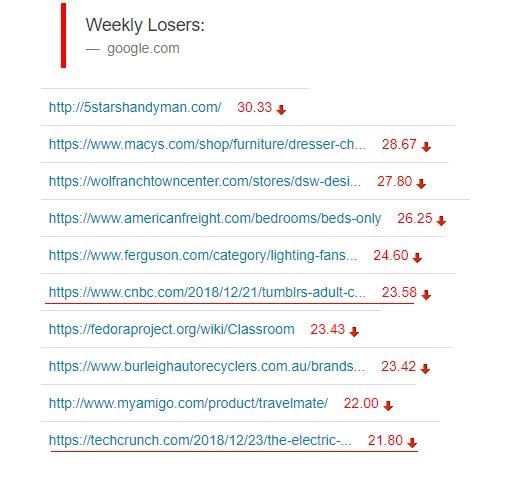

Algoroo, another Google algorithm tracker, has added TechCrunch and CNBC to its top losers list. This, yet again, supports our understanding that the update is aimed at news websites and blogs across different industry niches.

In 2018, Google rolled out the infamous Medic Update targeting wellness and YMYL websites. The impact was huge and many websites that were affected are yet to come to terms with the traffic loss.

We found that the sites impacted by the Medic Update were lacking the E.A.T (Expertise, Authority, Trustworthiness) quality signals. A few days after this, Google confirmed the same saying the update had nothing to do with user experience.

Some websites hit by the Medic Update made remarkable comebacks after the algorithm update in November. The sites that recovered from the Medic Update created quality content based on the Google EAT guidelines.

The rollout of the update was completed on Sunday as all the sensors had cooled down by Monday. We will soon do a detailed analysis of the sites that were affected by the “Google Newsgate Algorithm.”

This will help you understand why the sites were affected and how they can recover from the latest Google Algorithm update.

9 Major Google Algorithm Updates before 2018

Whenever there’s a new update from Google, websites are either affected positively or negatively. Discussed below are the major algorithm updates by Google before 2018.

- Panda

- Penguin

- Hummingbird

- Pigeon

- Mobile

- RankBrain

- Possum

- Fred

- Maccabees

What is Google Panda Update?

The Google Panda update was released in February 2011. It aimed to lower the rankings of websites with thin or poor-quality content and bring sites with high-quality content to the top of the SERPs. The Panda search filter is updated from time to time, and sites escape the penalty once they make appropriate changes.

Here’s a brief overview of its hazards and how you can adapt to the Panda update:

Targets of Panda

- Plagiarized or thin content

- Duplicate content

- Keyword stuffing

- User-generated spam

Panda’s workflow

Panda assigns quality scores to pages based on content quality and ranks them in the SERP accordingly. Panda updates are frequent; hence, so are the penalties and recoveries.

How to adapt

Regularly check your web pages for plagiarized content, thin content, and keyword stuffing. You can do that with quality-checking tools such as Siteliner and Copyscape.
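For a rough idea of what such a check involves, the sketch below flags thin pages by word count and near-duplicate pages by the overlap of three-word shingles. The thresholds (`min_words`, `max_similarity`) and the function names are assumptions chosen for illustration; this is not how Panda or the tools above actually score content.

```python
import re


def words(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def shingles(text, size=3):
    """Return the set of overlapping word n-grams (shingles) in the text."""
    tokens = words(text)
    return {tuple(tokens[i:i + size]) for i in range(len(tokens) - size + 1)}


def jaccard(text_a, text_b):
    """Jaccard similarity of the two texts' shingle sets, between 0.0 and 1.0."""
    a, b = shingles(text_a), shingles(text_b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def audit(pages, min_words=300, max_similarity=0.8):
    """Return (thin_urls, duplicate_pairs) for a dict mapping URL to page text."""
    thin = [url for url, text in pages.items() if len(words(text)) < min_words]
    urls = sorted(pages)
    duplicates = [
        (u, v)
        for i, u in enumerate(urls)
        for v in urls[i + 1:]
        if jaccard(pages[u], pages[v]) >= max_similarity
    ]
    return thin, duplicates
```

Comparing every pair of pages is quadratic, which is fine for a small site; a larger crawl would need something like MinHash instead.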

Are you aware that the Google Panda algorithm from 2011 no longer exists in its original form? It has evolved into a new algorithm named after another animal: Coati. At first, Panda was just part of the core ranking algorithm, but it later evolved into a new one.

What is Google Coati?

Hyung-Jin Kim, the VP of Google Search, said that while Panda has been “consumed” into the larger core ranking algorithm, Coati was a successor to Panda and is an update to the Panda algorithm. As time has passed, Panda has evolved into Coati. Coati is not a core update, but Panda and Coati are both parts of the core ranking algorithm.

In an interview with Hyung-Jin Kim, he stated that Panda was launched because Google was worried about the direction of content on the web. They make such algorithmic changes to improve search quality and encourage content creators to create better content.

Knowing that Google’s Panda algorithm has evolved into a new algorithm called Coati is not crucial for most people, but it can still be interesting for those who follow search engine optimization.

What is Google Penguin Update?

Google’s Penguin update was released in April 2012. It aims to filter out websites that boost rankings in SERPs through spammy links, i.e., purchased low-quality links to boost Google ranking.

Targets of Penguin

- Links with over-optimized text

- Spammy links

- Irrelevant links

Penguin’s workflow

It works by lowering the ranks of sites with manipulative links. It checks the quality of backlinks and degrades the sites with low-quality links.

How to adapt

Track the growth of your link profile and run regular backlink audits to check the quality of your links. Tools such as SEO SpyGlass can help you analyze your site’s backlinks and adapt to the Penguin update.
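One simple signal in a backlink audit is how concentrated your anchor text is. The sketch below assumes you have exported your backlinks as a list of dictionaries with an `anchor` key (a hypothetical format) and flags any anchor text that accounts for more than a chosen share of all links. The 30% threshold is an arbitrary assumption, not a figure published by Google.

```python
from collections import Counter


def anchor_text_share(backlinks):
    """Map each normalized anchor text to its share of all backlinks."""
    counts = Counter(link["anchor"].strip().lower() for link in backlinks)
    total = sum(counts.values())
    return {anchor: n / total for anchor, n in counts.items()}


def over_optimized(backlinks, threshold=0.3):
    """Return anchor texts whose share of the link profile exceeds the threshold."""
    shares = anchor_text_share(backlinks)
    return sorted(anchor for anchor, share in shares.items() if share > threshold)


# Hypothetical export from a backlink tool.
links = [
    {"anchor": "cheap flights"},
    {"anchor": "Cheap Flights"},
    {"anchor": "cheap flights "},
    {"anchor": "Example Travel"},
    {"anchor": "https://example.com"},
]
print(over_optimized(links))
```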

What is Google Hummingbird Update?

While keywords are still vital, the Hummingbird helps a page to rank well even if the query doesn’t contain exact terms in the sentence.

Targets of Hummingbird

- Low-quality content

- Keyword stuffing

Hummingbird’s workflow

It helps Google to fetch the web pages as per the complete query asked by the user instead of searching for individual terms in the query. However, it also relies on the importance of keywords while ranking a web page in the SERPs.

How to adapt

Expand your keyword research and focus on conceptual queries. Additionally, look for related queries, synonyms, and co-occurring words or terms. You can easily get these ideas from Google Autocomplete or Google Related Searches.

What is Google Pigeon Update?

Pigeon is the Google update released on July 24, 2014, for the US and expanded to the United Kingdom, Canada, and Australia on December 22, 2014. It aimed to improve the rankings of local listings for a search query. The changes also affect Google Maps search results along with regular Google search results.

Targets of Pigeon

- Perilous on-page optimization

- Poor off-page optimization

Pigeon’s workflow

Pigeon works to rank local results based on the user’s location. The update developed some ties between the traditional core algorithm and the local algorithm.

How to adapt

Put in serious effort on both on-page and off-page SEO. It is best to start with on-page SEO; later, you can adopt the best off-page SEO techniques to rank at the top of Google SERPs. One of the best off-page tactics is to get your business into local listings.


What is Google Mobile-Friendly Update?

Google Mobile-Friendly (aka Mobilegeddon) algorithm update was launched on April 21st, 2015. It was designed to give a boost to mobile-friendly pages in Google’s mobile search results while filtering out pages that are not mobile-friendly or optimized for mobile viewing.

Targets of Mobile update

- The poor mobile user interface

- Lack of mobile-optimized web page

Mobile update workflow

The Google mobile-friendly update aims to rank web pages that support mobile viewing at the top of the SERP and downgrade web pages that are unresponsive or unsupported on mobile devices.

How to adapt

Tweak the web design to provide better mobile usability and reduce loading time. Google’s mobile-friendly test will help you identify the changes needed to cope with various versions of mobile software.
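Before running a full test, you can do a quick sanity check of your own. The sketch below looks for a responsive viewport meta tag, which is only one of many mobile usability signals. It is an assumption-laden illustration, not a reimplementation of Google’s mobile-friendly test, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Record the content of the last <meta name="viewport"> tag seen."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name == "viewport":
            self.content = attributes.get("content") or ""


def has_responsive_viewport(html):
    """Return True if the page declares a viewport with width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.content is None:
        return False
    return "width=device-width" in finder.content.replace(" ", "").lower()
```

A page that passes this check can still fail on tap-target size, font size, or loading time, so use it only as a first filter.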


What is Google RankBrain Update?

As reported by Bloomberg and Google, RankBrain is an algorithm update that is a machine-learning artificial intelligence system launched to process the search engine results efficiently. It was launched on October 26th, 2015.

Targets of RankBrain

- Poor user-interface

- Insubstantial content

- Irrelevant features on the web page

RankBrain workflow

RankBrain is a machine learning system released to understand the meaning of the queries better and provide relevant content to the audience.

It is a part of Google’s Hummingbird algorithm. It ranks the web pages based on the query-specific features and relevancy of a website.

How to adapt

Conduct competitive analysis and optimize your web pages for comprehensiveness and content relevancy. You can use tools such as SEMrush and SpyFu to analyze the concepts, terms, and subjects used by high-ranking competitor pages. This is a good way to outmatch your competitors.

What is Google Possum Update?

Possum was the Google algorithm update released on September 1st, 2016. It is considered the most significant algorithm update since the 2014 Pigeon update. The update focused on improving the rankings of businesses that fell outside physical city limits and on filtering business listings based on address and affiliations.

Targets of Possum

- Tough Competition in your target location

Possum’s workflow

Search results are served based on the searcher’s location. The closer you are to a given business, the more likely you are to see it at the top of the local search results. Interestingly, Possum also surfaced well-known companies located outside the physical city area.
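To make the proximity idea concrete, here is a minimal sketch that orders businesses by great-circle distance from the searcher using the haversine formula. Distance is only one of the factors described above, and Google’s actual local ranking is not public, so the `lat`/`lon` fields and the pure distance ordering are illustrative assumptions.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two latitude/longitude points."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = (
        sin((lat2 - lat1) / 2) ** 2
        + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    )
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def sort_by_proximity(searcher, businesses):
    """Order businesses from nearest to farthest from the searcher (lat, lon)."""
    return sorted(
        businesses,
        key=lambda b: haversine_km(searcher[0], searcher[1], b["lat"], b["lon"]),
    )
```

This also shows why location-specific rank tracking matters: the same query produces a different ordering for every searcher position.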

How to adapt

Increase your keywords list and do location-specific rank tracking. Local businesses should focus on more keywords because of the volatility Possum brought into the SERPs.

What is Google Fred Update?

Fred is the Google algorithm update released on March 8, 2017.

Targets of Fred update

- Affiliate-heavy content

- Ad-centered content

- Thin content

Fred’s workflow

This update targets web pages that violate Google’s webmaster guidelines. The pages primarily affected are blogs with low-quality content that exist mainly to generate revenue by driving traffic.

How to adapt

Remove thin content by analyzing it through Google Search Quality Guidelines. If you’re allowing advertisements on your pages, make sure they’re on the pages with high-quality and useful content for the users. Please don’t try to manipulate Google into thinking that your page is high-quality content, when it is, instead, full of affiliate links.

“What is the latest Google Algorithm Update?” is the question that SEOs search the most nowadays. The major reason “Google Algorithm Update” has become such a trending keyword is the uncertainty that follows the rollout of each update. Google rolls out hundreds of core algorithm updates each year, and the search engine giant announces the few that have a far-reaching impact on the SERP.

Each time Google updates its algorithm, it moves a step forward in making the search experience easier and more relevant to users. However, as SEO professionals, we recommend using white-hat techniques.

Maccabees Update

If your website relied on multiple pages targeting permutations of the same keywords, you may have been a victim of the ‘Google Maccabees Update.’

The update hit hundreds of websites that had multiple pages filled with huge numbers of keyword permutations. It was framed to catch long-tail keywords used in permutations, a tactic sites relied on because search results prefer pages with long-tail keywords. The majority of the sites hit by Maccabees were e-commerce, affiliate, travel, and real estate sites.

For instance, a travel agency targets multiple keywords, and a few of those that were flagged are as follows:

- Low-cost holiday package to Switzerland.

- Cheap Switzerland holiday package.

- Low-cost tickets to Switzerland.

Similarly, an affiliate website has multiple pages containing the following:

- Avoid mosquitoes at home.

- Get rid of mosquitoes.

- Wipeout mosquitoes.

- Wipeout mosquitoes fast.

You can guess why these sites were aiming for long-tail keywords and why SEOs kept such a keen eye on them: though the keywords seem similar, all of them are huge traffic drivers.
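If you want to check whether your own pages look like keyword permutations of each other, a rough sketch is to compare the word sets of the target phrases. The stop-word list, the 0.5 threshold, and the function names below are assumptions for illustration; nothing here reflects how Google actually detected these pages.

```python
import re
from itertools import combinations

STOP_WORDS = {"a", "an", "at", "of", "the", "to"}  # small illustrative list


def keyword_tokens(phrase):
    """Lowercase the phrase, split it into words, and drop stop words."""
    return set(re.findall(r"[a-z0-9]+", phrase.lower())) - STOP_WORDS


def near_duplicate_pairs(phrases, threshold=0.5):
    """Return pairs of phrases whose word sets overlap at or above the threshold."""
    pairs = []
    for first, second in combinations(phrases, 2):
        a, b = keyword_tokens(first), keyword_tokens(second)
        if a and b and len(a & b) / len(a | b) >= threshold:
            pairs.append((first, second))
    return pairs


titles = [
    "Low-cost holiday package to Switzerland.",
    "Cheap Switzerland holiday package.",
    "Low-cost tickets to Switzerland.",
]
for pair in near_duplicate_pairs(titles):
    print(pair)
```

Note that plain word overlap misses synonyms such as “cheap” and “low-cost”, so a real audit would need a synonym map or a semantic similarity measure.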

There is no formal name for this update. However, informally, in remembrance of Hanukkah and the search community, Barry Schwartz of SERoundtable called it ‘Maccabees.’

A Google spokesperson stated that the changes in the algorithm are meant to make the search results more relevant. The relevance may come from on-page or off-page content, or sometimes, both.

What is a Google Broad Core Update?

A broad core update is an algorithm update that can impact the search visibility of a large number of websites. Each time an update is rolled out, Google reconsiders the SERP ranking of websites based on expertise, authoritativeness, and trustworthiness (E-A-T).

- Unlike the daily algorithm updates, the broad core update comes with a far-reaching impact.

- Fluctuation in ranking positions can be detected for search queries globally.

- The update improves contextual results for search queries.

- There is no fix for websites that were previously hurt by Google update.

- The only fix is to improve the content quality.

- Focus more on Expertise, Authority and Trustworthiness (E.A.T)

To learn more about what a broad core algorithm update is, check our in-depth article on the subject. We will give you the ins and outs of the new update in a short while. Please keep a tab on this blog.

Future of Google Algorithm Updates

As we all know, the Google organic search is on a self-induced slow-poison! How many of you remember the old Google’s search results page, where all the organic search results were on the left and minimal ads on the right? Don’t bother, remembering isn’t going to make it come back!

If you’ve been using Google for the last two decades, then the transformation of Google Search may have amazed you. If you don’t think so, just compare these two screenshots of Google SERP from 2005 and 2019.

2005

2019

Google started making major changes to the algorithm, starting with the 2012 Penguin update. During each Google Algorithm Update, webmasters focus on factors such as building links, improving the content, or technical SEO aspects.

Even though these factors play a predominant role in the ranking of websites on Google SERP, an all too important factor is often overlooked!

There has been a sea of changes in the way Google displays its search results, especially with the UI/UX. This has impacted websites more drastically than any other algorithm update that has been launched to date.

In the above screenshot, the first fold of the entire SERP is taken over by Google features. The top result is Google Ads, the one next to it is the map pack, and on the right, you have Google Shopping Ads.

The ads and other Google-owned features that occupied less than 20% of the first fold of the SERP Page now take up 80% of it. According to our CTR heatmap, 80% of users tend to click on websites that are listed within the first fold of a search engine results page.

This is an alarming number as ranking on top of Google SERP can no longer guarantee you higher CTR because Google is keen to drive traffic to its own entities, especially ads.

Since this is a factor that webmasters have very little control over, the survival of websites in 2022 and beyond will depend on how they strategize their SEO efforts to understand the future course of the search engine giant.

When talking about how Google Algorithm Updates might work in 2022, it’s impossible to skip two trends – the increasing number of mobile and voice searches. The whole mobile-friendly update of April 2015 was not a farce, but a leap ahead by the search engine giant that would eventually make it a self-sustained entity.

We will discuss voice and mobile search in detail a little later, as they require a lot of focus.

Algorithms will Transform Google as a Content Curator

If you dig into the history of search engines a little deeper, you’d know that Yahoo started as a web directory that required entering details manually. Of course, this wasn’t a scalable model. On the other hand, Google’s founders decided to build algorithms that can fetch the data and store it for the future. However, Google later realized that their model can be turned into one of the most ROI-generating ones.

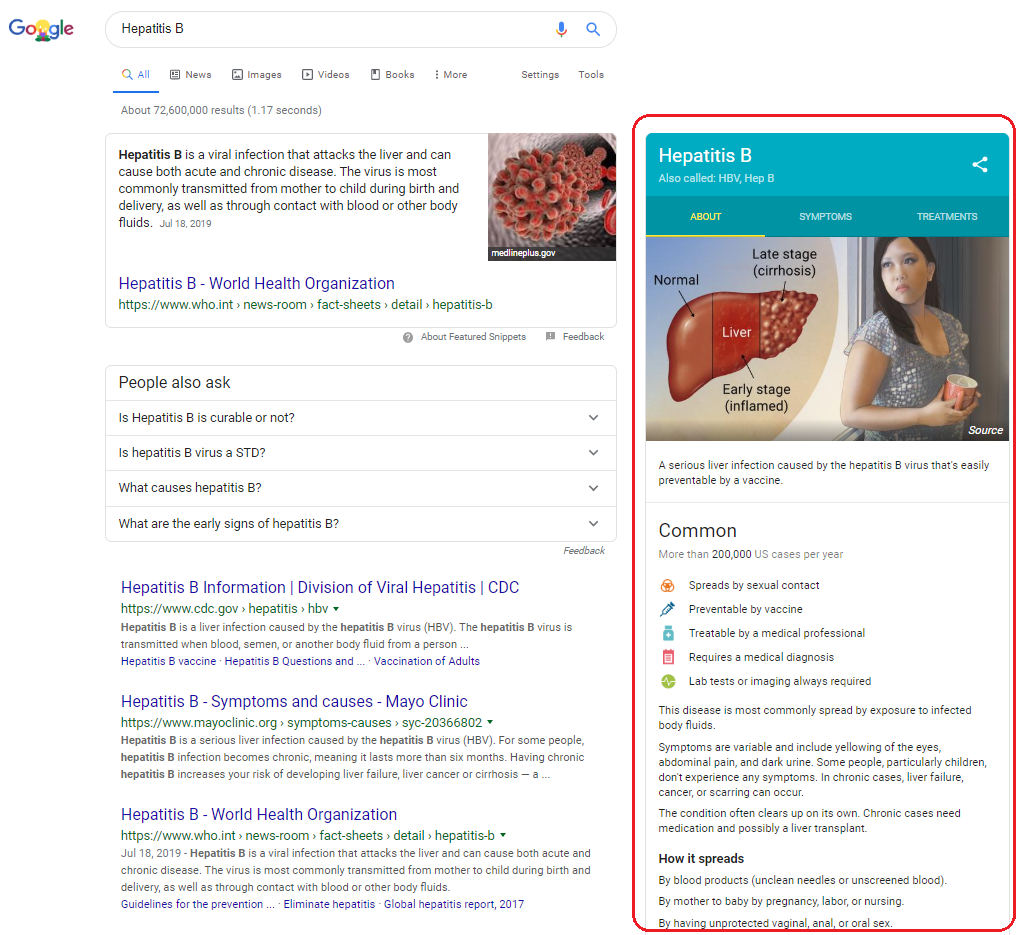

Just Google “Hepatitis B” and you will find a knowledge graph on the right that is autogenerated by Google.

This particular information about Hepatitis B is generated by Google’s Learning Algorithm by stitching together data from authority websites. According to Google, this medical information is collected from high-quality websites, medical professionals, and search results.

With Google being the repository of important web pages that users value, you can expect more such self-curated content in Google search. It’s interesting that even the creatives used in such results are created by Google, which is another example of Google doing self-attribution.

Here is Another Example of Google Curating Content

A Google search for “how tall is the Eiffel tower?” will display a knowledge card with the exact answer to the user’s question, without any attribution.

But further scrutiny into the SERP, especially the right-side Knowledge Graph, will help you find out how Google came up with the answer.

This is an indication of how critical the structured data is. However, structured data is a double-edged sword as Google uses it on SERP (like in this case) with zero attribution.

Google Algorithms will Stick to Its Philosophy, But with a Greedy Eye

If you think Google is fair and isn’t greedy, here is something that you may have missed in the earlier screenshot.

The way Google is moving ahead, it seems the algorithms will scatter ads clandestinely within the SERP to direct more traffic to promoted/sponsored content. Basically, Google is taking data from your website, repurposing it for the knowledge graph, and getting monetary benefits, which ultimately do not reach you. However, taking into account Google’s current position, this has to be seen as a desperate move!

Google was petrified by the decrease in the click-through rates received for results on mobile devices and did everything possible to get its million-dollar advert revenue back on track. One such step was what we now call Mobilegeddon. The mobile-first indexing approach introduced in 2015 was a silent threat to websites, asking them to either toe the line or be ready to be pushed to the graveyard (the second and following pages of Google search).

The perk that Google earned from this strategy is that it saved the time and effort needed to update its algorithm to crawl and render both mobile and desktop versions of websites. So, with mobile-first indexing in place, Google decided to use a mobile-first UI/UX. Here is how:

(Screenshot of the Mobile-first design of Google)

E.A.T (Expertise, Authority, Trust)

Google has been quite vocal about maintaining the E.A.T standard. Even though this is now more focused on websites in the YMYL category, moving forward, the algorithms will become smarter and start using it for all niches.

Online reviews, social mentions, brand mentions, and general sentiments across the web will play a vital role in ranking websites on Google.

No-follow and UGC Links Will Pass Link Juice

It had been a while since Google made any major announcement about links. However, 2019 saw the search giant add two new link attributes in addition to do-follow and no-follow.

UGC and Sponsored are the two new link attributes that have become part of the Google ranking factors. A majority of the sites use the no-follow as the default attribute for external links. This is one reason why Google introduced the two new link attributes. Moving forward, no-follow and UGC links will start passing link juice. Even though the importance of these links is not as significant as the do-follow, they definitely play a vital role.

When it comes to promoted links, Google will ensure that its algorithm passes no link juice at all. Google asked webmasters to start using these attributes as early as possible, as they became part of the Google ranking factors in March 2020.
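To see which attributes your own pages currently use, the following sketch parses a page and labels each link by its `rel` value (`nofollow`, `ugc`, or `sponsored`). The precedence order among the labels and the “followed” label for links without any of these values are assumptions made for this example, not rules stated by Google.

```python
from html.parser import HTMLParser


class LinkRelCollector(HTMLParser):
    """Collect (href, set of rel values) for every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href is None:
            return
        rel_values = set((attributes.get("rel") or "").lower().split())
        self.links.append((href, rel_values))


def classify_links(html):
    """Map each href to 'sponsored', 'ugc', 'nofollow', or 'followed'."""
    collector = LinkRelCollector()
    collector.feed(html)
    labels = {}
    for href, rel_values in collector.links:
        if "sponsored" in rel_values:
            labels[href] = "sponsored"
        elif "ugc" in rel_values:
            labels[href] = "ugc"
        elif "nofollow" in rel_values:
            labels[href] = "nofollow"
        else:
            labels[href] = "followed"
    return labels
```

Running this over your templates makes it easy to spot comment sections that still use a bare `nofollow` where `ugc` would describe the link more precisely.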
